- Nature Paper: Certified Randomness from a trapped-ion quantum computer

- Applications of Certified Randomness

- JPMorganChase Press Release, Tech Blog

- Bloomberg Story

- Scientific American Coverage

Frequently Asked Questions

Please do reach out if you have further questions.

What is the big deal about certified randomness? Can’t I just create randomness by rolling dice, or by tossing coins?

Yes, you can certainly generate randomness by rolling dice or by tossing coins. The problem certified randomness tries to solve is that of proving randomness to a skeptical party. When you watch a lottery draw on TV, you see a person physically pulling numbered balls from a machine which mixes them; you have a visual confirmation that the numbers drawn are random. However, there are countless other processes where randomness gets used, for instance when your name gets randomly pulled up for an audit, but you don’t get to verify it. With certified randomness, anyone can verify that numbers used in applications where privacy and fairness are of utmost importance are generated from a truly random source.

What does it mean to “verify” randomness? How do you achieve this?

We would like to be able to inspect the numbers and be confident they came from a truly random source. We achieve this by requiring that the randomness comes from quantum computers which have two properties making all this possible. First, the measurement outcomes of a quantum computer executing a random quantum program (a sequence of randomly chosen operations) are truly random; this derives from the intrinsic randomness in quantum mechanics. Second, the numbers so generated are still correlated to the program the quantum computer executes. This lets us design a protocol where

- a ‘requester’ generates quantum program and sends them to a ‘generator’

- the ‘generator’ returns the outcomes of that quantum program very fast

As we receive the numbers sent by the ‘generator’, we can spot check some of them to see if they correspond to the programs used to generate them. If so, we can be fairly confident that the numbers actually came from a quantum computer, and therefore are truly random. This requires the quantum computer to have high-fidelity and low-latency.

Wait! But aren’t there other ways of generating randomness using quantum mechanics? Can’t I just use radioactive sources which I know are unpredictable? Or measure the polarization of light?

Yes, certainly. You can generate randomness by using a Geiger counter or by measuring light polarization or by detecting cosmic rays entering the atmosphere; they are all good sources of entropy. But, these sources still suffer from the problem of verifiability; such randomness cannot be verified by a third party and can easily be faked using pseudorandom generators. I can give you a set of numbers, lying that I used a quantum source when I actually used a pseudorandom generator, and you will not be able to tell the difference.

Why are pseudorandom number generators bad?

Pseudorandom generators produce a sequence of numbers that “look” random, starting from an initial seed. If you know the seed, you can predict all subsequent random numbers it generates. This may be okay if you don’t care about privacy, confidentiality, or security, but otherwise such randomness can be problematic and insecure.

The other issue with PRNG is that a sophisticated actor can engineer a backdoor in them and detecting those backdoors could be difficult. We have, as an illustration, the imfamous example of the psuedorandom generator DUAL_EC_DRBG being compromised.

I see. So you say that for random numbers to be certified, the way you do it, the numbers have to pass some “test”? Do you you know that passing the test implies that the numbers truly came from a quantum computer and not from some clever deterministic source? I mean, how easy is it to cheat your protocol?

In our protocol, we demand that the generator (which we hope is running a quantum computer) execute quantum programs. We know that it is very very difficult for a traditional computer to ‘imitate’ or ‘simulate’ a quantum computer, so it takes an extraordinary amount of resources and time for a traditional computer to run them.

In our experiment, the generator returns a random number corresponding to a program it hasn’t seen before in less than two and a half seconds. Furthermore, these numbers also pass our verification test. We know from mathematical proofs that it is almost impossible for deterministic algorithms to generate bits that pass our verification in such a short time. In particular, we verify about 70,000 bits at security $10^{-6}$, which means that even if the server is running an extremely powerful computer (say four times the largest supercomputer at the time of the experiment), the chances of it producing fewer than 70,000 bits of entropy is less than one in a million.

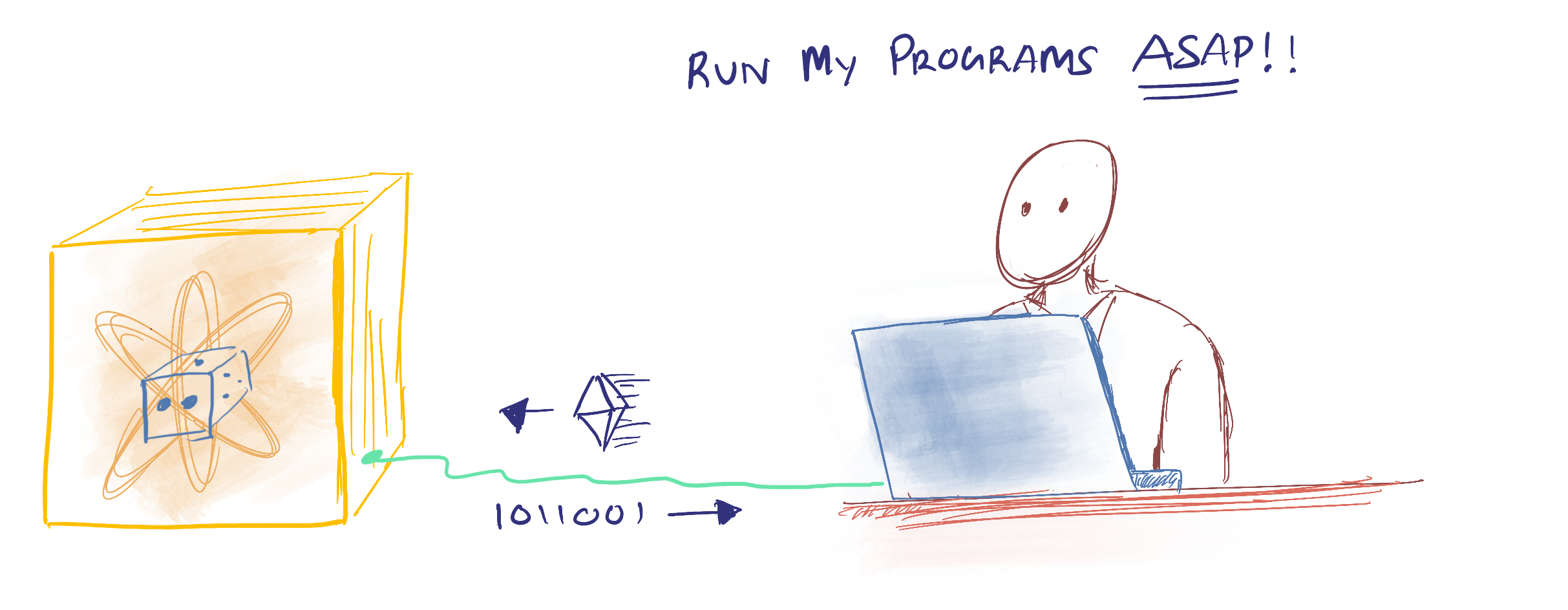

How do you talk to the quantum computer?

We interact with the quantum computer over the Internet. The quantum computer sits in Colorado. But we did the experiment from several locations in Manhattan and even the mountains of the Adirondacks during the total eclipse of 2024 (see artist’s rendition below).

Is this verification easy and cheap?

Unfortunately not at the moment. Measuring the correlation between numbers and quantum programs happens to be an expensive task. In our work, we need large supercomputers to do so. However, the good news is that this verification only has to happen once in a while. In contrast, a malicious adversary will have to continually spend resources, day in and day out, trying to cheat. This gap between the resources the user/verifier needs and what the adversary needs is at the heart of what makes this protocol possible.

In our experiment, we use Frontier (the largest supercomputer at the time of the experiment), Summit, Polaris, and Perlmutter supercomputers. Another cool thing: we perform verification at 1.1 ExaFLOPS. That’s eighteen zeros of processing power.

I still have one confusion. You say that the quantum computer executes programs it hasn’t seen before. You also say that these programs have to be random. If you already have some kind of randomness to generate these programs, then why bother with this entire protocol at all?

Really important question. Indeed, we use some initial randomness to generate these ‘challenge’ programs to the quantum computer. The benefit of our protocol is that it can provide randomness expansion, where I can use $m$ initial random bits to collect $n > m$ bits. Or, it can also provide randomness amplification where I convert a weak-entropy source to a strong-entropy source. A further advantage is that the protocol can be remotely verified by third parties. The randomness in my local computer is not easily verifiable by anyone. Bu, if you use your local randomness to implement our protocol, then you have some ‘proof’ of randomness anyone can check.

Should I be using certified randomness everywhere? Is there a point to upgrading ALL my randomness sources to this?

Given that generating certified randomness is a lot more difficult than simply rolling dice or measuring CPU noise, it may not be a practical choice for many applications, especially if the application doesn’t care about privacy, security, transparency etc. For applications where fairness is of paramount importance (for example, random auctions over the internet, or in blockchains), the use of certified randomness can add to the integrity of the application. Similarly, sometimes you may have to produce ‘proof of randomness’ in the future (“Hey, remember these decisions I randomly made a few years back, here is the proof that I actually used a truly random source”). Certified randomness is useful for those use cases.

Actually, in addition to our experimental paper, we have also written an accompanying whitepaper which outlines some possible applications of this kind of randomness.

I get a feeling that this is useful for blockchains. Is that right?

A blockchain is indeed an example where certified randomness could be invaluable. A blockchain may make decisions (such as who to assign rewards) for which it is important to convince a group of distributed users that the decision was made fairly using fair random numbers, and not, for example, manipulated to favor one particular user. Integrating certified randomness in the blockchain pipeline could add to the integrity of the chain. Our white paper provides some more details on such a use in blockchains.

How can the general public use trusted randomness? It sounds like this needs specialized equipment.

You bring up a good point. Given how ubiquitous randomness is, the idea of randomness-as-a-public-utility has been around for a while. The idea is to create a publicly accessible server or a ‘beacon’ which releases a certain number of high-entropy bits periodically or on demand. However, this still requires you to trust that whoever is operating the beacon is doing so honestly. In our whitepaper, we present a way of using certified randomness to produce a distributed beacon that anyone can verify. This would eliminate some of the trust issues present in existing randomness beacons.

Not to get too technical, but what are the underlying mathematical assumptions behind your work? I know a lot of work in cryptography come with assumptions?

Our work is based on a conjecture called Long List Hardness Assumption (LLHA) introduced by Scott Aaronson. This assumption is closely related to the hardness of Random Circuit Sampling, the experiment commonly used to establish quantum supremacy. And, there are good reasons to believe it to be true. An evidence: the conjecture has been proven to hold in the random oracle model.

Every time someone says ‘We did something using a quantum computer impossible with classical computer’, a week later, someone counters that claim with improved classical algorithms. Are you worried that your work might suffer from the same fate?

Not really. We never claim the quantum programs we use in our experiment be impossible to simulate. In fact, verification of random numbers is only possible because we can simulate these programs, albeit in time longer than what it takes a quantum computer to run the program honestly without cheating. At the time of experiemnt, there were no classical algorithms that could simulate these programs in less than ninety seconds (the quantum computer takes less than two and a half), so the results we present will hold regardless of future improvements in classical algorithms.

Furthermore, a classical algorithm to simulate quantum programs will help an adversary (by making it slightly easier to cheat) as well as the requester (who can now make the challenge programs even harder). So, the gains neutralize each other.

What is the future direction for this line of work?

As we mention in our paper, this demonstration still has room for improvement in terms of security as well as rate of entropy generation. Likewise, a primitive like certified randomness should enable new applications in cryptography and information science. We highlight some of them in our accompanying whitepaper but certainly there is much work to be done there. It would also be nice if someone could prove LLHA, or design a protocol on a different complexity assumption altogether.

Are there other ways of generating certified randomness apart from your protocol?

All other methods known prior to our work come with drawbacks. Some require you to put additional trust in the server. Others are not implementable in a near-term quantum computer and need a fully fault-tolerant machine.